from dsc80_utils import *

# Pandas Tutor setup

!pip install pandas-tutor

%reload_ext pandas_tutor

%set_pandas_tutor_options {"maxDisplayCols": 8, "nohover": True, "projectorMode": True}

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

Announcements 📣¶

- Lab 1 resubmission due tonight (optional).

- Project 1 checkpoint due Saturday.

- Lab 2 due Monday.

Agenda¶

- Pivot tables.

- Distributions.

- Simpson's paradox.

- Merging.

- Many-to-one & many-to-many joins.

- Transforming.

- The price of apply.

Question 🤔

Find the most popular Male and Female baby Name for each Year in baby. Exclude Years where there were fewer than 1 million births recorded.

baby_path = Path('data') / 'baby.csv'

baby = pd.read_csv(baby_path)

baby

Pivot tables¶

Pivot tables: an extension of grouping¶

Pivot tables are a compact way to display tables for humans to read:

| Sex | F | M |

|---|---|---|

| Year | ||

| 2018 | 1698373 | 1813377 |

| 2019 | 1675139 | 1790682 |

| 2020 | 1612393 | 1721588 |

| 2021 | 1635800 | 1743913 |

| 2022 | 1628730 | 1733166 |

- Notice that each value in the table is a sum over the counts, split by year and sex.

- You can think of pivot tables as grouping using two columns, then "pivoting" one of the group labels into columns.

pivot_table¶

The pivot_table (not pivot!) DataFrame method aggregates a DataFrame using two columns. To use it:

df.pivot_table(index=index_col,

columns=columns_col,

values=values_col,

aggfunc=func)

The resulting DataFrame will have:

- One row for every unique value in index_col.

- One column for every unique value in columns_col.

- Values determined by applying func on values in values_col.

last_5_years = baby.query('Year >= 2018')

last_5_years

last_5_years.pivot_table(

index='Year',

columns='Sex',

values='Count',

aggfunc='sum',

)

# Look at the similarity to the snippet above!

(last_5_years

.groupby(['Year', 'Sex'])

[['Count']]

.sum()

)
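To make the similarity between the two snippets concrete, here is a minimal sketch on a small made-up DataFrame (the names and counts in toy are hypothetical, not taken from baby.csv). It suggests that grouping on two columns and then unstacking one of the group labels gives the same table as pivot_table.

```python
import pandas as pd

# Hypothetical rows in the same shape as baby: one row per name, sex, and year.
toy = pd.DataFrame({
    'Name':  ['Ava', 'Mia', 'Leo', 'Ava', 'Leo', 'Max'],
    'Sex':   ['F', 'F', 'M', 'F', 'M', 'M'],
    'Count': [10, 20, 30, 40, 50, 60],
    'Year':  [2021, 2021, 2021, 2022, 2022, 2022],
})

pivoted = toy.pivot_table(index='Year', columns='Sex', values='Count', aggfunc='sum')

# Group by both columns, then "pivot" the 'Sex' group label into the columns.
unstacked = toy.groupby(['Year', 'Sex'])['Count'].sum().unstack('Sex')

print(pivoted)
print((pivoted == unstacked).all().all())
```

The grouped version keeps both labels in the index until unstack is called, which is the "pivoting" step described earlier.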

Question 🤔

Use .pivot_table to find the number of penguins per 'island' and 'species'.

penguins = sns.load_dataset('penguins').dropna()

penguins

Note that there is a NaN at the intersection of 'Biscoe' and 'Chinstrap', because there were no Chinstrap penguins on Biscoe Island.

We can either use the fillna method afterwards or the fill_value argument to fill in NaNs.
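As a rough illustration of the two options, the sketch below uses a tiny hypothetical stand-in for penguins (toy_penguins and its values are made up) in which no Chinstrap penguin lives on Biscoe.

```python
import pandas as pd

# Hypothetical mini version of penguins; no Chinstrap row has island 'Biscoe'.
toy_penguins = pd.DataFrame({
    'species': ['Adelie', 'Adelie', 'Gentoo', 'Chinstrap', 'Adelie'],
    'island':  ['Biscoe', 'Dream', 'Biscoe', 'Dream', 'Biscoe'],
    'body_mass_g': [3700.0, 3500.0, 5000.0, 3800.0, 3650.0],
})

with_nans = toy_penguins.pivot_table(
    index='species', columns='island', values='body_mass_g', aggfunc='size'
)

# Option 1: fill in the missing combinations after pivoting.
filled_after = with_nans.fillna(0)

# Option 2: ask pivot_table to fill them in directly.
filled_directly = toy_penguins.pivot_table(
    index='species', columns='island', values='body_mass_g',
    aggfunc='size', fill_value=0
)

print(with_nans)
print(filled_directly)
```

Both options should give the same counts; fill_value just saves a step.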

Distributions¶

Example: Penguins¶

Let's start by using the pivot_table method to recreate the DataFrame shown below.

| sex | Female | Male |

|---|---|---|

| species | ||

| Adelie | 73 | 73 |

| Chinstrap | 34 | 34 |

| Gentoo | 58 | 61 |

Joint distribution¶

When using aggfunc='count' (or aggfunc='size', as in the cell below), a pivot table describes the joint distribution of two categorical variables. This is also called a contingency table.

counts = penguins.pivot_table(

index='species',

columns='sex',

values='body_mass_g',

aggfunc='size',

fill_value=0,

)

counts

We can normalize the DataFrame by dividing by the total number of penguins. The resulting numbers can be interpreted as probabilities that a randomly selected penguin from the dataset belongs to a given combination of species and sex.

# How to calculate the total number of penguins?

Marginal probabilities¶

If we sum over one of the axes, we can compute marginal probabilities, i.e. unconditional probabilities.

joint = counts/counts.sum().sum()

joint

# .sum(axis=0) sums by compressing rows, which computes column sums

joint.sum(axis=0)

joint.sum(axis=1)

For instance, the second Series tells us that a randomly selected penguin has a 0.36 chance of being of species 'Gentoo'.
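The 0.36 figure can be checked by hand. The sketch below rebuilds counts directly from the species-by-sex table shown earlier, so it runs without loading the penguins dataset; the variable names are just for illustration.

```python
import pandas as pd

# Counts copied from the species-by-sex table shown earlier in this section.
counts = pd.DataFrame(
    {'Female': [73, 34, 58], 'Male': [73, 34, 61]},
    index=pd.Index(['Adelie', 'Chinstrap', 'Gentoo'], name='species'),
)
counts.columns.name = 'sex'

total = counts.to_numpy().sum()   # total number of penguins
joint = counts / total            # joint distribution; all entries sum to 1

sex_marginal = joint.sum(axis=0)      # one probability per sex
species_marginal = joint.sum(axis=1)  # one probability per species

print(total)
print(species_marginal.round(2))
```

Here the 'Gentoo' entry is (58 + 61) / 333, which rounds to 0.36.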

Conditional probabilities¶

Using counts, how might we compute conditional probabilities like $$P(\text{species } = \text{"Adelie"} \mid \text{sex } = \text{"Female"})?$$

counts

$$\begin{align*} P(\text{species} = c \mid \text{sex} = x) &= \frac{\# \: (\text{species} = c \text{ and } \text{sex} = x)}{\# \: (\text{sex} = x)} \end{align*}$$

Here is more of a derivation, where $N$ is the total number of penguins:

$$\begin{align*} P(\text{species} = c \mid \text{sex} = x) &= \frac{P(\text{species} = c \text{ and } \text{sex} = x)}{P(\text{sex} = x)} \\ &= \frac{\frac{\# \: (\text{species} = c \text{ and } \text{sex} = x)}{N}}{\frac{\# \: (\text{sex} = x)}{N}} \\ &= \frac{\# \: (\text{species} = c \text{ and } \text{sex} = x)}{\# \: (\text{sex} = x)} \end{align*}$$

Conditional probabilities¶

To find conditional probabilities of 'species' given 'sex', divide by column sums. To find conditional probabilities of 'sex' given 'species', divide by row sums.

counts.sum(axis=0)

The conditional distribution of 'species' given 'sex' is below. Note that in this new DataFrame, the 'Female' and 'Male' columns each sum to 1.

counts / counts.sum(axis=0)

For instance, the above DataFrame tells us that the probability that a randomly selected penguin is of 'species' 'Adelie' given that they are of 'sex' 'Female' is 0.442424.
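As a quick check of that number, the sketch below rebuilds counts from the table shown earlier and divides by the column sums. It relies on pandas aligning the index of counts.sum(axis=0) with the columns of counts.

```python
import pandas as pd

# Counts copied from the species-by-sex table shown earlier in this section.
counts = pd.DataFrame(
    {'Female': [73, 34, 58], 'Male': [73, 34, 61]},
    index=pd.Index(['Adelie', 'Chinstrap', 'Gentoo'], name='species'),
)

# Dividing by a Series aligns its index with the DataFrame's columns,
# so each column is divided by its own total.
species_given_sex = counts / counts.sum(axis=0)

print(species_given_sex.sum(axis=0))              # each column sums to 1
print(species_given_sex.loc['Adelie', 'Female'])  # 73 / 165
```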

Question 🤔

Find the conditional distribution of 'sex' given 'species'.

Hint: Use .T.

Example: Grades¶

Two students, Lisa and Bart, just finished their first year at UCSD. They both took a different number of classes in Fall, Winter, and Spring.

Each quarter, Lisa had a higher GPA than Bart.

But Bart has a higher overall GPA.

How is this possible? 🤔

Grade points¶

The number of "grade points" earned for a course is:

$$\text{grade points} = \text{number of units} \cdot \text{grade point per unit (out of 4)}$$

Run this cell to create a DataFrame showing Lisa and Bart's grades.

simpsons = pd.DataFrame({'Lisa Units': [20, 18, 5],

'Lisa Grade Points': [46.0, 54.0, 20.0],

'Lisa GPA': [46/20, 54/18, 20/5],

'Bart Units': [5, 5, 22],

'Bart Grade Points': [10, 13.5, 82.5],

'Bart GPA': [10/5, 13.5/5, 82.5/22],

},

index=['Fall', 'Winter', 'Spring'])

simpsons

Lisa had a higher GPA in all three quarters.

Question 🤔

Compute Lisa's overall GPA and Bart's overall GPA.

simpsons

simpsons['Lisa Grade Points'].sum()/simpsons['Lisa Units'].sum()

simpsons['Bart Grade Points'].sum()/simpsons['Bart Units'].sum()

What happened?¶

simpsons

When Lisa and Bart both performed poorly, Lisa took more units than Bart. This brought down 📉 Lisa's overall average.

When Lisa and Bart both performed well, Bart took more units than Lisa. This brought up 📈 Bart's overall average.
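One way to see this numerically: the overall GPA is a weighted average of the quarterly GPAs, where the weights are the units taken each quarter. The sketch below reuses the numbers from simpsons; the units and gpa DataFrames are just a rearrangement for illustration.

```python
import numpy as np
import pandas as pd

quarters = ['Fall', 'Winter', 'Spring']

# Same numbers as the simpsons DataFrame above.
units = pd.DataFrame({'Lisa': [20, 18, 5], 'Bart': [5, 5, 22]}, index=quarters)
gpa = pd.DataFrame({'Lisa': [46/20, 54/18, 20/5],
                    'Bart': [10/5, 13.5/5, 82.5/22]}, index=quarters)

# Overall GPA = average of quarterly GPAs, weighted by units per quarter.
overall = {name: np.average(gpa[name], weights=units[name])
           for name in ['Lisa', 'Bart']}

print((gpa['Lisa'] > gpa['Bart']).all())  # Lisa is ahead in every quarter...
print(overall)                            # ...but Bart is ahead overall
```

Lisa's heaviest weight (20 units) sits on her lowest GPA, while Bart's heaviest weight (22 units) sits on his highest.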

Simpson's paradox¶

Simpson's paradox occurs when grouped data and ungrouped data show opposing trends.

- It is named after Edward H. Simpson, not Lisa or Bart Simpson.

It often happens because there is a hidden factor (i.e. a confounder) within the data that influences results.

Question: What is the "correct" way to summarize your data? What if you had to act on these results?

What happened?¶

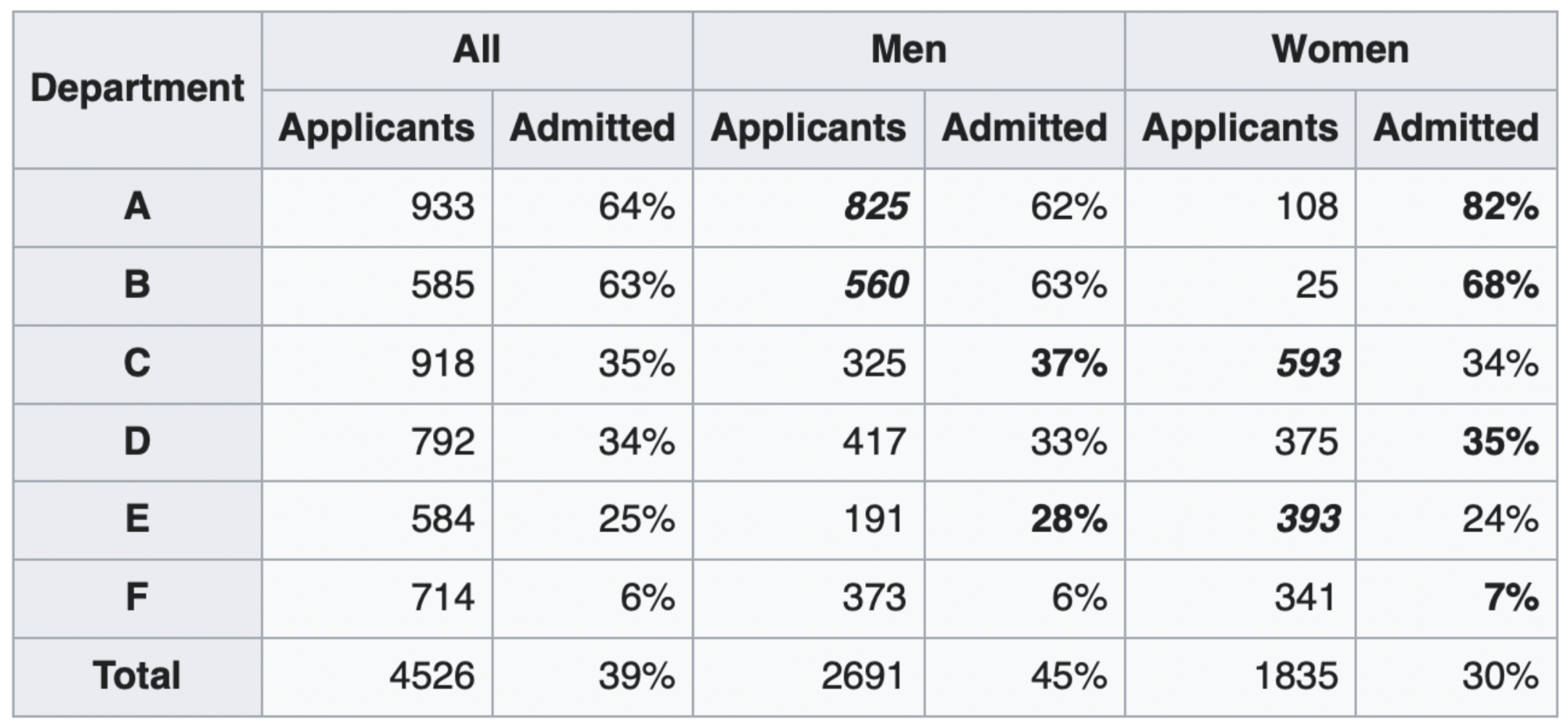

The overall acceptance rate for women (30%) was lower than it was for men (45%).

However, most departments (A, B, D, F) had a higher acceptance rate for women.

Department A had a 62% acceptance rate for men and an 82% acceptance rate for women!

- 31% of men applied to Department A.

- 6% of women applied to Department A.

Department F had a 6% acceptance rate for men and a 7% acceptance rate for women!

- 14% of men applied to Department F.

- 19% of women applied to Department F.

Conclusion: Women tended to apply to departments with a lower acceptance rate; the data don't support the claim that there was major gender discrimination against women.

Takeaways¶

Be skeptical of...

- Aggregate statistics.

- People misusing statistics to "prove" that discrimination doesn't exist.

- Drawing conclusions from individual publications ($p$-hacking, publication bias, narrow focus, etc.).

- And more!

We need to apply domain knowledge and human judgement calls to decide what to do when Simpson's paradox is present.

Really?¶

To handle Simpson's paradox with rigor, we need some ideas from causal inference which we don't have time to cover in DSC 80. This video has a good example of how to approach Simpson's paradox using a minimal amount of causal inference, if you're curious (not required for DSC 80).

IFrame('https://www.youtube-nocookie.com/embed/zeuW1Z2EtLs?si=l2Dl7P-5RCq3ODpo',

width=800, height=450)

Further reading¶

- Gender Bias in Admission Statistics?

- Contains a great visualization, but seems to be paywalled now.

- What is Simpson's Paradox?

- Understanding Simpson's Paradox

- Requires more statistics background, but gives a rigorous understanding of when to use aggregated vs. unaggregated data.

Merging¶

Example: Name categories¶

The New York Times article from Lecture 1 claims that certain categories of names are becoming more popular. For example:

Forbidden names like Lucifer, Lilith, Kali, and Danger.

Evangelical names like Amen, Savior, Canaan, and Creed.

Mythological names like Julius, Hera, and Nyx.

It also claims that baby boomer names like Susan and Karen are becoming less popular.

Let's see if we can verify these claims using data!

Loading in the data¶

The baby DataFrame has one row for every combination of 'Name', 'Sex', and 'Year'.

baby

Our second DataFrame, nyt, contains the names mentioned in the article and their categorization.

nyt_path = Path('data') / 'nyt_names.csv'

nyt = pd.read_csv(nyt_path)

nyt

Issue: To find the number of babies born with (for example) forbidden names each year, we need to combine information from both baby and nyt.

Merging¶

- We want to link rows from baby and nyt together whenever the names match up.

- This is a merge (pandas term), i.e. a join (SQL term).

- A merge is appropriate when we have two sources of information about the same individuals that are linked by one or more common columns.

- The common column(s) are called the join key.

Example merge¶

Let's demonstrate on a small subset of baby and nyt.

nyt_small = nyt.iloc[[11, 12, 14]].reset_index(drop=True)

names_to_keep = ['Julius', 'Karen', 'Noah']

baby_small = (baby

.query("Year == 2020 and Name in @names_to_keep")

.reset_index(drop=True)

)

dfs_side_by_side(baby_small, nyt_small)

baby_small.merge(nyt_small, left_on='Name', right_on='nyt_name')

The merge method¶

The merge DataFrame method joins two DataFrames by columns or indexes.

- As mentioned before, "merge" is just the pandas word for "join."

When using the merge method, the DataFrame before merge is the "left" DataFrame, and the DataFrame passed into merge is the "right" DataFrame.

- In baby_small.merge(nyt_small), baby_small is considered the "left" DataFrame and nyt_small is the "right" DataFrame; the columns from the left DataFrame appear to the left of the columns from the right DataFrame.

By default:

- If join keys are not specified, all shared columns between the two DataFrames are used.

- The "type" of join performed is an inner join. This is the only type of join you saw in DSC 10, but there are more, as we'll now see!

Join types: inner joins¶

%%pt

baby_small.merge(nyt_small, left_on='Name', right_on='nyt_name')

- Note that 'Noah' and 'Freya' do not appear in the merged DataFrame.

- This is because there is:

- no 'Noah' in the right DataFrame (nyt_small), and

- no 'Freya' in the left DataFrame (baby_small).

- The default type of join that merge performs is an inner join, which keeps the intersection of the join keys.

Different join types¶

We can change the type of join performed by changing the how argument in merge. Let's experiment!

%%pt

# Note the NaNs!

baby_small.merge(nyt_small, left_on='Name', right_on='nyt_name', how='left')

%%pt

baby_small.merge(nyt_small, left_on='Name', right_on='nyt_name', how='right')

%%pt

baby_small.merge(nyt_small, left_on='Name', right_on='nyt_name', how='outer')

Different join types handle mismatches differently¶

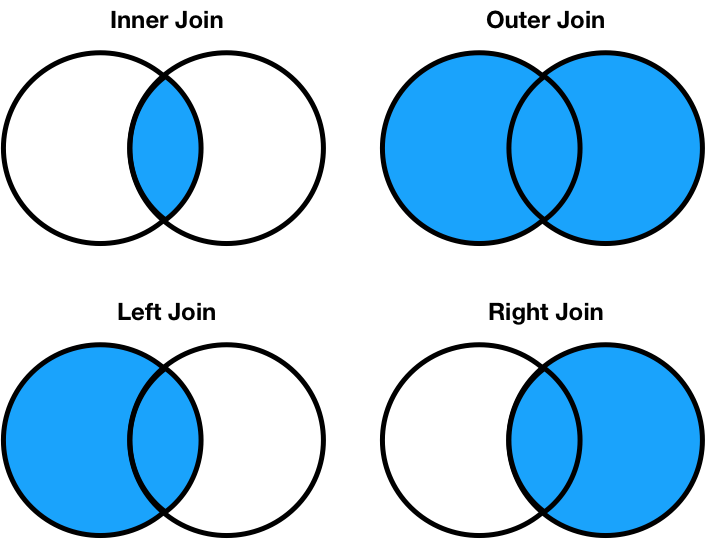

There are four types of joins.

- Inner: keep only matching keys (intersection).

- Outer: keep all keys in both DataFrames (union).

- Left: keep all keys in the left DataFrame, whether or not they are in the right DataFrame.

- Right: keep all keys in the right DataFrame, whether or not they are in the left DataFrame.

- Note that a.merge(b, how='left') contains the same information as b.merge(a, how='right'), just in a different order.
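The four join types can be compared side by side with a small sketch. The DataFrames left and right below are hypothetical stand-ins for baby_small and nyt_small (the counts and categories are made up); only the pattern of matching and non-matching keys matters.

```python
import pandas as pd

# Hypothetical stand-ins for baby_small and nyt_small (values are made up).
left = pd.DataFrame({'Name': ['Julius', 'Karen', 'Noah'],
                     'Count': [100, 200, 300]})
right = pd.DataFrame({'nyt_name': ['Karen', 'Julius', 'Freya'],
                      'category': ['boomer', 'mythology', 'mythology']})

# Count the rows produced by each join type.
rows = {}
for how in ['inner', 'left', 'right', 'outer']:
    merged = left.merge(right, left_on='Name', right_on='nyt_name', how=how)
    rows[how] = merged.shape[0]
print(rows)  # {'inner': 2, 'left': 3, 'right': 3, 'outer': 4}

# A left join from one side holds the same rows as a right join from the other.
a = left.merge(right, left_on='Name', right_on='nyt_name', how='left')
b = right.merge(left, left_on='nyt_name', right_on='Name', how='right')
print(a)
print(b[a.columns])  # reorder b's columns to compare with a
```

'Noah' only survives joins that keep all left keys, and 'Freya' only survives joins that keep all right keys.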

Notes on the merge method¶

- merge is flexible – you can merge using a combination of columns, or the index of the DataFrame.

- If the two DataFrames have the same column names, pandas will add _x and _y to the duplicated column names to avoid having columns with the same name (change these using the suffixes argument).

- There is, in fact, a join method, but it's actually a wrapper around merge with fewer options.

- As always, the documentation is your friend!
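To illustrate the note about duplicated column names, here is a short sketch with two hypothetical DataFrames (the names and counts are made up) that share both the join key and a non-key column.

```python
import pandas as pd

# Two hypothetical DataFrames sharing the key 'Name' and a non-key column 'Count'.
counts_2019 = pd.DataFrame({'Name': ['Noah', 'Emma'], 'Count': [19000, 17000]})
counts_2020 = pd.DataFrame({'Name': ['Noah', 'Emma'], 'Count': [18000, 15000]})

default = counts_2019.merge(counts_2020, on='Name')
print(default.columns.tolist())  # ['Name', 'Count_x', 'Count_y']

renamed = counts_2019.merge(counts_2020, on='Name', suffixes=('_2019', '_2020'))
print(renamed.columns.tolist())  # ['Name', 'Count_2019', 'Count_2020']
```

Descriptive suffixes tend to be easier to read later than the default _x and _y.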

Lots of pandas operations do an implicit outer join!¶

- pandas will almost always try to match up index values using an outer join.

- It won't tell you that it's doing an outer join; it'll just throw NaNs in your result!

df1 = pd.DataFrame({'a': [1, 2, 3]}, index=['hello', 'dsc80', 'students'])

df2 = pd.DataFrame({'b': [10, 20, 30]}, index=['dsc80', 'is', 'awesome'])

dfs_side_by_side(df1, df2)

df1['a'] + df2['b']
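The sketch below spells out what that addition produces, and shows one possible way to opt out of the NaNs with the add method and its fill_value argument. Whether treating a missing label as 0 is appropriate depends on the data.

```python
import pandas as pd

df1 = pd.DataFrame({'a': [1, 2, 3]}, index=['hello', 'dsc80', 'students'])
df2 = pd.DataFrame({'b': [10, 20, 30]}, index=['dsc80', 'is', 'awesome'])

# The result is indexed by the union of both indexes (an outer join).
total = df1['a'] + df2['b']
print(total)  # 5 labels; only 'dsc80' appears in both, so only it is not NaN

# If a missing label should count as 0 instead, say so explicitly.
total_filled = df1['a'].add(df2['b'], fill_value=0)
print(total_filled)
```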

Many-to-one & many-to-many joins¶

One-to-one joins¶

- One-to-one joins are joins where neither the left DataFrame nor the right DataFrame contains any duplicates in the join key.

- What if there are duplicated join keys, in one or both of the DataFrames we are merging?

# Run this cell to set up the next example.

profs = pd.DataFrame(

[['Sam', 'UCSD', 5],

['Janine', 'UCSD', 8],

['Marina', 'UIC', 7],

['Justin', 'OSU', 5],

['Soohyun', 'UCSD', 2],

['Suraj', 'UCB', 2]],

columns=['Name', 'School', 'Years']

)

schools = pd.DataFrame({

'Abbr': ['UCSD', 'UCLA', 'UCB', 'UIC'],

'Full': ['University of California San Diego', 'University of California, Los Angeles', 'University of California, Berkeley', 'University of Illinois Chicago']

})

programs = pd.DataFrame({

'uni': ['UCSD', 'UCSD', 'UCSD', 'UCB', 'OSU', 'OSU'],

'dept': ['Math', 'HDSI', 'COGS', 'CS', 'Math', 'CS'],

'grad_students': [205, 54, 281, 439, 304, 193]

})

Many-to-one joins¶

- Many-to-one joins are joins where one of the DataFrames contains duplicate values in the join key.

- The resulting DataFrame will preserve those duplicate entries as appropriate.

dfs_side_by_side(profs, schools)

%%pt

profs.merge(schools, left_on='School', right_on='Abbr', how='left')
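If a join is expected to be many-to-one, merge can check that expectation through its validate argument. The sketch below repeats the setup so it runs on its own; using validate here is a suggestion, not something the cell above does.

```python
import pandas as pd

profs = pd.DataFrame(
    [['Sam', 'UCSD', 5], ['Janine', 'UCSD', 8], ['Marina', 'UIC', 7],
     ['Justin', 'OSU', 5], ['Soohyun', 'UCSD', 2], ['Suraj', 'UCB', 2]],
    columns=['Name', 'School', 'Years'],
)
schools = pd.DataFrame({
    'Abbr': ['UCSD', 'UCLA', 'UCB', 'UIC'],
    'Full': ['University of California San Diego',
             'University of California, Los Angeles',
             'University of California, Berkeley',
             'University of Illinois Chicago'],
})

# validate='many_to_one' raises a MergeError if 'Abbr' has duplicates in schools.
merged = profs.merge(schools, left_on='School', right_on='Abbr',
                     how='left', validate='many_to_one')

print(merged.shape[0] == profs.shape[0])  # one output row per professor
print(merged[merged['Full'].isna()])      # 'OSU' has no match in schools
```

Because each school abbreviation appears at most once in schools, the left join cannot add rows; it can only leave NaNs where there is no match.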

Many-to-many joins¶

Many-to-many joins are joins where both DataFrames have duplicate values in the join key.

dfs_side_by_side(profs, programs)

Before running the following cell, try predicting the number of rows in the output.

%%pt

profs.merge(programs, left_on='School', right_on='uni', how='inner')

- merge stitched together every UCSD row in profs with every UCSD row in programs.

- Since there were 3 UCSD rows in profs and 3 in programs, there are $3 \cdot 3 = 9$ UCSD rows in the output. The same applies for all other schools.
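The row counts can be inspected directly. This sketch repeats the setup so it runs on its own, then counts how many output rows each school contributes to the inner join.

```python
import pandas as pd

profs = pd.DataFrame(
    [['Sam', 'UCSD', 5], ['Janine', 'UCSD', 8], ['Marina', 'UIC', 7],
     ['Justin', 'OSU', 5], ['Soohyun', 'UCSD', 2], ['Suraj', 'UCB', 2]],
    columns=['Name', 'School', 'Years'],
)
programs = pd.DataFrame({
    'uni': ['UCSD', 'UCSD', 'UCSD', 'UCB', 'OSU', 'OSU'],
    'dept': ['Math', 'HDSI', 'COGS', 'CS', 'Math', 'CS'],
    'grad_students': [205, 54, 281, 439, 304, 193],
})

merged = profs.merge(programs, left_on='School', right_on='uni', how='inner')

# Number of output rows contributed by each school.
rows_per_school = merged['School'].value_counts()
print(rows_per_school)
print(merged.shape[0])
```

'UIC' contributes no rows because it appears in profs but not in programs, and an inner join drops unmatched keys.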

Question 🤔

Fill in the blank so that the last statement evaluates to True.

df = profs.merge(programs, left_on='School', right_on='uni')

df.shape[0] == (____).sum()

Don't use merge (or join) in your solution!

dfs_side_by_side(profs, programs)

Returning to our original question¶

Let's find the popularity of baby name categories over time. To start, we'll define a DataFrame that has one row for every combination of 'category' and 'Year'.

category_counts = (

baby

.merge(nyt, left_on='Name', right_on='nyt_name')

.groupby(['category', 'Year'])

['Count']

.sum()

.reset_index()

)

category_counts

These plots come from the DataFrame above and show trends over time in different name categories.

Transforming¶

Transforming values¶

A transformation results from performing some operation on every element in a sequence, e.g. a Series.

While we haven't discussed it yet in DSC 80, you learned how to transform Series in DSC 10, using the apply method. apply is very flexible – it takes in a function, which itself takes in a single value as input and returns a single value.

baby

def number_of_vowels(string):

return sum(c in 'aeiou' for c in string.lower())

baby['Name'].apply(number_of_vowels)

# Built-in functions work with apply, too.

baby['Name'].apply(len)

The price of apply¶

Unfortunately, apply runs really slowly!

%%timeit

baby['Name'].apply(number_of_vowels)

%%timeit

res = []

for name in baby['Name']:

res.append(number_of_vowels(name))

Internally, apply actually just runs a for-loop!

So, when possible – say, when applying arithmetic operations – we should work on Series objects directly and avoid apply!

# Rounds down to the nearest multiple of 10.

baby['Year'] // 10 * 10

# Does the same, but slower.

baby['Year'].apply(lambda y: y // 10 * 10)
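To compare the two approaches without baby.csv, here is a rough sketch on a synthetic Series (years is a hypothetical stand-in for baby['Year']). It uses the standard-library timeit module in place of the %%timeit cell magic; the timings will vary by machine.

```python
import timeit

import numpy as np
import pandas as pd

# Hypothetical stand-in for baby['Year']: 100,000 random years.
rng = np.random.default_rng(80)
years = pd.Series(rng.integers(1880, 2023, size=100_000))

vectorized = years // 10 * 10
with_apply = years.apply(lambda y: y // 10 * 10)
print((vectorized == with_apply).all())  # both approaches give the same values

# Time 5 runs of each; exact numbers depend on your machine.
t_vec = timeit.timeit(lambda: years // 10 * 10, number=5)
t_apply = timeit.timeit(lambda: years.apply(lambda y: y // 10 * 10), number=5)
print(f'vectorized: {t_vec:.4f}s, apply: {t_apply:.4f}s')
```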

The .str accessor¶

For string operations, pandas provides a convenient .str accessor.

%%timeit

baby['Name'].str.len()

%%timeit

baby['Name'].apply(len)

It's very convenient and runs at about the same speed as apply.
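The .str accessor is not limited to len. As one possible illustration, the vowel count from earlier can be written with .str.lower and .str.count, which takes a regular expression. The names Series below is a small made-up sample.

```python
import pandas as pd

# A few names for illustration; any Series of strings would work.
names = pd.Series(['Olivia', 'Noah', 'Julius', 'Nyx'])

def number_of_vowels(string):
    return sum(c in 'aeiou' for c in string.lower())

with_apply = names.apply(number_of_vowels)

# .str methods can be chained; .str.count takes a regular expression.
with_str = names.str.lower().str.count('[aeiou]')

print(with_str.tolist())  # [4, 2, 3, 0]
```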

Other data representations¶

Representations of tabular data¶

In DSC 80, we work with DataFrames in pandas.

- When we say pandas DataFrame, we're talking about the pandas API for its DataFrame objects.

- API stands for "application programming interface." We'll learn about these more soon.

- When we say "DataFrame", we're referring to a general way to represent data (rows and columns, with labels for both rows and columns).

There are many other ways to work with data tables!

- Examples: R data frames, SQL databases, spreadsheets, or even matrices from linear algebra.

- When you learn SQL in DSC 100, you'll find many similarities (e.g. slicing columns, filtering rows, grouping, joining, etc.).

- Relational algebra captures common data operations between many data table systems.

Why use DataFrames over something else?

DataFrames vs. spreadsheets¶

- DataFrames give us a data lineage: the code records every change made to the data. Not so in spreadsheets!

- Using a general-purpose programming language gives us the ability to handle much larger datasets, and we can use distributed computing systems to handle massive datasets.

DataFrames vs. matrices¶

$$\mathbf{X} = \begin{bmatrix} 1 & 0 \\ 0 & 4 \\ 0 & 0 \end{bmatrix}$$

- Matrices are mathematical objects. They only hold numbers, but have many useful properties (which you've learned about in your linear algebra class, Math 18).

- Often, we process data from a DataFrame into matrix format for machine learning models. You saw this a bit in DSC 40A, and we'll see this more in DSC 80 in a few weeks.
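As a small sketch of that conversion, the DataFrame below is a hypothetical one (the column names x1, x2 and row labels are made up) whose values match the matrix $\mathbf{X}$ above. The to_numpy method drops the labels and returns a NumPy array.

```python
import numpy as np
import pandas as pd

# Hypothetical DataFrame whose numeric values match the matrix X above.
df = pd.DataFrame({'x1': [1, 0, 0], 'x2': [0, 4, 0]}, index=['a', 'b', 'c'])

X = df.to_numpy()  # drops the row and column labels, keeps only the numbers
print(X.shape)     # (3, 2)
print(X.T @ X)     # matrix operations are now available
```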

DataFrames vs. relations¶

- Relations are the data representation for relational database systems (e.g. MySQL, PostgreSQL, etc.).

- You'll learn all about these in DSC 100.

- Database systems are much better than DataFrames at storing many large data tables and handling concurrency (many people reading and writing data at the same time).

- Common workflow: load a subset of data in from a database system into pandas, then make a plot.

- Or: load and clean data in pandas, then store it in a database system for others to use.
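Both workflows can be sketched with Python's built-in sqlite3 module. The example below assumes an in-memory SQLite database as a stand-in for a real database server, and reuses the programs data from earlier; the table name 'programs' is an arbitrary choice.

```python
import sqlite3

import pandas as pd

# An in-memory SQLite database stands in for a real database server.
conn = sqlite3.connect(':memory:')

programs = pd.DataFrame({
    'uni': ['UCSD', 'UCSD', 'UCSD', 'UCB', 'OSU', 'OSU'],
    'dept': ['Math', 'HDSI', 'COGS', 'CS', 'Math', 'CS'],
    'grad_students': [205, 54, 281, 439, 304, 193],
})

# Store data from pandas in the database for others to use.
programs.to_sql('programs', conn, index=False)

# Load only the subset we need from the database back into pandas.
ucsd = pd.read_sql_query(
    "SELECT dept, grad_students FROM programs WHERE uni = 'UCSD'", conn
)
print(ucsd)
conn.close()
```

The filtering happens inside the database, so only the matching rows are ever loaded into memory.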

Summary¶

- There is no "formula" to automatically resolve Simpson's paradox! Domain knowledge is important.

- We've covered most of the primary DataFrame operations: subsetting, aggregating, joining, and transforming.

Next time¶

Data cleaning: applying what we've already learned to real-world, messy data!