from dsc80_utils import *

Agenda 📆¶

- Text features.

- Bag of words.

- Cosine similarity.

- TF-IDF.

- Example: State of the Union addresses 🎤.

Text features¶

Review: Regression and features¶

- In DSC 40A, our running example was to use regression to predict a data scientist's salary, given their GPA, years of experience, and years of education.

- After minimizing empirical risk to determine optimal parameters, $w_0^*, \dots, w_3^*$, we made predictions using:

$$\text{predicted salary} = w_0^* + w_1^* \cdot \text{GPA} + w_2^* \cdot \text{experience} + w_3^* \cdot \text{education}$$

- GPA, years of experience, and years of education are features – they represent a data scientist as a vector of numbers.

- e.g. Your feature vector may be [3.5, 1, 7].

- This approach requires features to be numeric.

Moving forward¶

Suppose we'd like to predict the sentiment of a piece of text from 1 to 10.

- 10: Very positive (happy).

- 1: Very negative (sad, angry).

Example:

Input: "DSC 80 is a pretty good class."

Output: 7.

We can frame this as a regression problem, but we can't directly use what we learned in 40A, because here our inputs are text, not numbers.

Text features¶

- Big question: How do we represent a text document as a feature vector of numbers?

- If we can do this, we can:

- use a text document as input in a regression or classification model (in a few lectures).

- quantify the similarity of two text documents (today).

Example: San Diego employee salaries¶

- Transparent California publishes the salaries of all City of San Diego employees.

- Let's look at the 2022 data.

salaries = pd.read_csv('https://transcal.s3.amazonaws.com/public/export/san-diego-2022.csv')

salaries['Employee Name'] = salaries['Employee Name'].str.split().str[0] + ' Xxxx'

salaries.head()

| Employee Name | Job Title | Base Pay | Overtime Pay | ... | Year | Notes | Agency | Status | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | Mara Xxxx | City Attorney | 227441.53 | 0.00 | ... | 2022 | NaN | San Diego | FT |

| 1 | Todd Xxxx | Mayor | 227441.53 | 0.00 | ... | 2022 | NaN | San Diego | FT |

| 2 | Terence Xxxx | Assistant Police Chief | 227224.32 | 0.00 | ... | 2022 | NaN | San Diego | FT |

| 3 | Esmeralda Xxxx | Police Sergeant | 124604.40 | 162506.54 | ... | 2022 | NaN | San Diego | FT |

| 4 | Marcelle Xxxx | Assistant Retirement Administrator | 279868.04 | 0.00 | ... | 2022 | NaN | San Diego | FT |

5 rows × 13 columns

Aside on privacy and ethics¶

- Even though the data we downloaded is publicly available, employee names still correspond to real people.

- Be careful when dealing with PII (personably identifiable information).

- Only work with the data that is needed for your analysis.

- Even when data is public, people have a reasonable right to privacy.

- Remember to think about the impacts of your work outside of your Jupyter Notebook.

Goal: Quantifying similarity¶

- Our goal is to describe, numerically, how similar two job titles are.

- For instance, our similarity metric should tell us that

'Deputy Fire Chief'and'Fire Battalion Chief'are more similar than'Deputy Fire Chief'and'City Attorney'.

- Idea: Two job titles are similar if they contain shared words, regardless of order. So, to measure the similarity between two job titles, we could count the number of words they share in common.

- Before we do this, we need to be confident that the job titles are clean and consistent – let's explore.

Exploring job titles¶

jobtitles = salaries['Job Title']

jobtitles.head()

0 City Attorney 1 Mayor 2 Assistant Police Chief 3 Police Sergeant 4 Assistant Retirement Administrator Name: Job Title, dtype: object

How many employees are in the dataset? How many unique job titles are there?

jobtitles.shape[0], jobtitles.nunique()

(12831, 611)

What are the most common job titles?

jobtitles.value_counts().iloc[:10]

Job Title

Police Officer Ii 1082

Police Sergeant 311

Fire Fighter Ii 306

...

Associate Engineer - Civil 254

Fire Captain 225

Program Manager 216

Name: count, Length: 10, dtype: int64

Are there any missing job titles?

jobtitles.isna().sum()

0

Fortunately, no.

Canonicalization¶

Remember, our goal is ultimately to count the number of shared words between job titles. But before we start counting the number of shared words, we need to consider the following:

- Some job titles may have punctuation, like

'-'and'&', which may count as words when they shouldn't.'Assistant - Manager'and'Assistant Manager'should count as the same job title.

- Some job titles may have "glue" words, like

'to'and'the', which (we can argue) also shouldn't count as words.'Assistant To The Manager'and'Assistant Manager'should count as the same job title.

- If we just want to focus on the titles themselves, then perhaps roman numerals should be removed: that is,

'Police Officer Ii'and'Police Officer I'should count as the same job title.

Let's address the above issues. The process of converting job titles so that they are always represented the same way is called canonicalization.

Punctuation¶

Are there job titles with unnecessary punctuation that we can remove?

To find out, we can write a regular expression that looks for characters other than letters, numbers, and spaces.

We can use regular expressions with the

.strmethods we learned earlier in the quarter just by usingregex=True.

# Uses character class negation.

jobtitles.str.contains(r'[^A-Za-z0-9 ]', regex=True).sum()

922

jobtitles[jobtitles.str.contains(r'[^A-Za-z0-9 ]', regex=True)].head()

137 Park & Recreation Director 248 Associate Engineer - Mechanical 734 Associate Engineer - Electrical 882 Associate Engineer - Traffic 1045 Associate Engineer - Civil Name: Job Title, dtype: object

It seems like we should replace these pieces of punctuation with a single space.

"Glue" words¶

Are there job titles with "glue" words in the middle, such as 'Assistant To The Manager'?

To figure out if any titles contain the word 'to', we can't just do the following, because it will evaluate to True for job titles that have 'to' anywhere in them, even if not as a standalone word.

# Why are we converting to lowercase?

jobtitles.str.lower().str.contains('to').sum()

1577

Instead, we need to look for 'to' separated by word boundaries.

jobtitles.str.lower().str.contains(r'\bto\b', regex=True).sum()

10

jobtitles[jobtitles.str.lower().str.contains(r'\bto\b', regex=True)]

1638 Assistant To The Chief Operating Officer

2183 Principal Assistant To City Attorney

2238 Assistant To The Director

...

6594 Confidential Secretary To Chief Operating Officer

6832 Confidential Secretary To Mayor

11028 Confidential Secretary To Mayor

Name: Job Title, Length: 10, dtype: object

We can look for other filler words too, like 'the' and 'for'.

jobtitles[jobtitles.str.lower().str.contains(r'\bthe\b', regex=True)]

1638 Assistant To The Chief Operating Officer 2238 Assistant To The Director 5609 Assistant To The Director 6544 Assistant To The Director Name: Job Title, dtype: object

jobtitles[jobtitles.str.lower().str.contains(r'\bfor\b', regex=True)]

3449 Assistant For Community Outreach 6889 Assistant For Community Outreach 10810 Assistant For Community Outreach Name: Job Title, dtype: object

We should probably remove these "glue" words.

Roman numerals (e.g. "Ii")¶

Lastly, let's try to identify job titles that have roman numerals at the end, like 'i' (1), 'ii' (2), 'iii' (3), or 'iv' (4). As before, we'll convert to lowercase first.

jobtitles[jobtitles.str.lower().str.contains(r'\bi+v?\b', regex=True)]

5 Police Officer Ii

10 Police Officer Ii

48 Fire Prevention Inspector Ii

...

12822 Clerical Assistant Ii

12828 Police Officer Ii

12830 Police Officer I

Name: Job Title, Length: 6087, dtype: object

Let's get rid of those numbers, too.

Fixing punctuation and removing "glue" words and roman numerals¶

Let's put the preceeding three steps together and canonicalize job titles by:

- converting to lowercase,

- removing each occurrence of

'to','the', and'for', - replacing each character that is not a letter, digit, or space with a single space,

- replacing each sequence of roman numerals – either

'i','ii','iii', or'iv'at the end with nothing, and - replacing each sequence of multiple spaces with a single space.

jobtitles = (

jobtitles

.str.lower()

.str.replace(r'\bto\b|\bthe\b\|bfor\b', '', regex=True)

.str.replace(r'[^A-Za-z0-9 ]', ' ', regex=True)

.str.replace(r'\bi+v?\b', '', regex=True)

.str.replace(r' +', ' ', regex=True) # ' +' matches 1 or more occurrences of a space.

.str.strip() # Removes leading/trailing spaces if present.

)

jobtitles.sample(5)

11815 lifeguard 12439 fire recruit 11354 recreation leader 12014 library assistant 1879 deputy director Name: Job Title, dtype: object

(jobtitles == 'police officer').sum()

1378

Bag of words 💰¶

Text similarity¶

Recall, our idea is to measure the similarity of two job titles by counting the number of shared words between the job titles. How do we actually do that, for all of the job titles we have?

A counts matrix¶

Let's create a "counts" matrix, such that:

- there is 1 row per job title,

- there is 1 column per unique word that is used in job titles, and

- the value in row

titleand columnwordis the number of occurrences ofwordintitle.

Such a matrix might look like:

| senior | lecturer | teaching | professor | assistant | associate | |

|---|---|---|---|---|---|---|

| senior lecturer | 1 | 1 | 0 | 0 | 0 | 0 |

| assistant teaching professor | 0 | 0 | 1 | 1 | 1 | 0 |

| associate professor | 0 | 0 | 0 | 1 | 0 | 1 |

| senior assistant to the assistant professor | 1 | 0 | 0 | 1 | 2 | 0 |

Then, we can make statements like: "assistant teaching professor" is more similar to "associate professor" than to "senior lecturer".

Creating a counts matrix¶

First, we need to determine all words that are used across all job titles.

jobtitles.str.split()

0 [city, attorney]

1 [mayor]

2 [assistant, police, chief]

...

12828 [police, officer]

12829 [police, dispatcher]

12830 [police, officer]

Name: Job Title, Length: 12831, dtype: object

# The .explode method concatenates the lists together into a single Series.

all_words = jobtitles.str.split().explode()

all_words

0 city

0 attorney

1 mayor

...

12829 dispatcher

12830 police

12830 officer

Name: Job Title, Length: 30064, dtype: object

Next, to determine the columns of our matrix, we need to find a list of all unique words used in titles. We can do this with np.unique, but value_counts shows us the distribution, which is interesting.

unique_words = all_words.value_counts()

unique_words

Job Title

police 2299

officer 1608

assistant 1267

...

gardener 1

governmental 1

dna 1

Name: count, Length: 332, dtype: int64

Note that in unique_words.index, job titles are sorted by number of occurrences!

For each of the 330 unique words that are used in job titles, we can count the number of occurrences of the word in each job title.

'deputy fire chief'contains the word'deputy'once, the word'fire'once, and the word'chief'once.'assistant managers assistant'contains the word'assistant'twice and the word'managers'once.

# Created using a dictionary to avoid a "DataFrame is highly fragmented" warning.

counts_dict = {}

for word in unique_words.index:

re_pat = fr'\b{word}\b'

counts_dict[word] = jobtitles.str.count(re_pat)

counts_df = pd.DataFrame(counts_dict).set_index(jobtitles)

counts_df.head()

| police | officer | assistant | fire | ... | stores | gardener | governmental | dna | |

|---|---|---|---|---|---|---|---|---|---|

| Job Title | |||||||||

| city attorney | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 |

| mayor | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 |

| assistant police chief | 1 | 0 | 1 | 0 | ... | 0 | 0 | 0 | 0 |

| police sergeant | 1 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 |

| assistant retirement administrator | 0 | 0 | 1 | 0 | ... | 0 | 0 | 0 | 0 |

5 rows × 332 columns

counts_df.shape

(12831, 332)

counts_df has one row for all 12831 employees, and one column for each unique word that is used in a job title.

Its third row, for example, tells us that the third job title contains 'police' once and 'assistant' once.

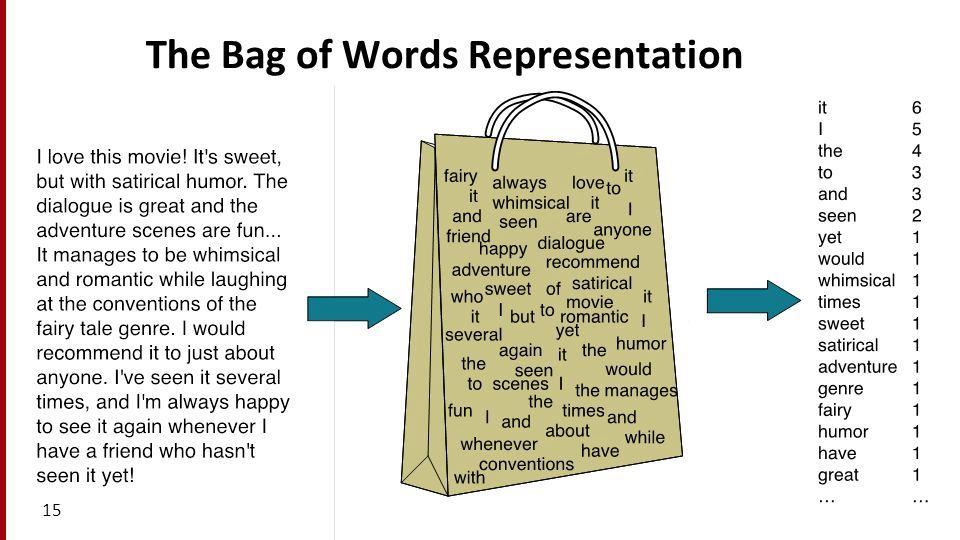

Bag of words¶

- The bag of words model represents texts (e.g. job titles, sentences, documents) as vectors of word counts.

- The "counts" matrices we have worked with so far were created using the bag of words model.

- The bag of words model defines a vector space in $\mathbb{R}^{\text{number of unique words}}$.

- In the matrix on the previous slide, each row was a vector corresponding to a specific job title.

- It is called "bag of words" because it doesn't consider order.

Cosine similarity¶

Question: Which job titles are most similar to 'deputy fire chief'?¶

- Remember, our idea was to count the number of shared words between two job titles.

- We now have access to

counts_df, which contains a row vector for each job title.

- How can we use it to count the number of shared words between two job titles?

Counting shared words¶

To start, let's compare the row vectors for 'deputy fire chief' and 'fire battalion chief'.

dfc = counts_df.loc['deputy fire chief'].iloc[0]

dfc

police 0

officer 0

assistant 0

..

gardener 0

governmental 0

dna 0

Name: deputy fire chief, Length: 332, dtype: int64

fbc = counts_df.loc['fire battalion chief'].iloc[0]

fbc

police 0

officer 0

assistant 0

..

gardener 0

governmental 0

dna 0

Name: fire battalion chief, Length: 332, dtype: int64

We can stack these two vectors horizontally.

pair_counts = (

pd.concat([dfc, fbc], axis=1)

.sort_values(by=['deputy fire chief', 'fire battalion chief'], ascending=False)

.head(10)

.T

)

pair_counts

| fire | chief | deputy | battalion | ... | assistant | engineer | civil | technician | |

|---|---|---|---|---|---|---|---|---|---|

| deputy fire chief | 1 | 1 | 1 | 0 | ... | 0 | 0 | 0 | 0 |

| fire battalion chief | 1 | 1 | 0 | 1 | ... | 0 | 0 | 0 | 0 |

2 rows × 10 columns

'deputy fire chief' and 'fire battalion chief' have 2 shared words in common. One way to arrive at this result mathematically is by taking their dot product:

np.dot(pair_counts.iloc[0], pair_counts.iloc[1])

2

Recall: The dot product¶

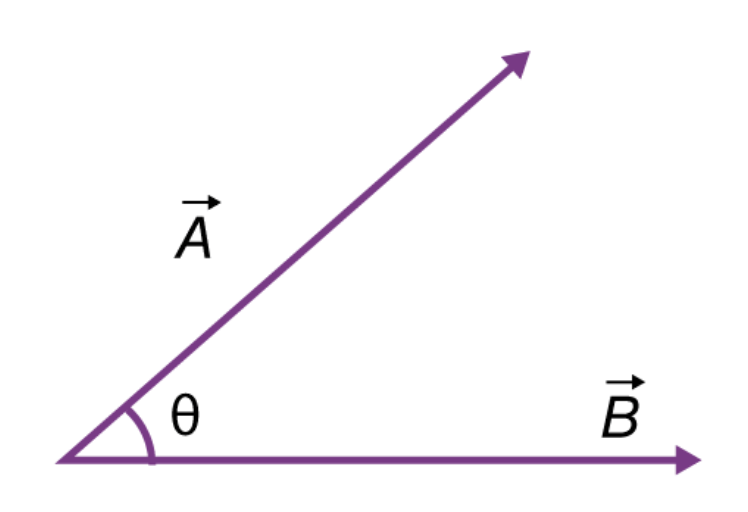

- Recall, if $\vec{a} = \begin{bmatrix} a_1 & a_2 & ... & a_n \end{bmatrix}^T$ and $\vec{b} = \begin{bmatrix} b_1 & b_2 & ... & b_n \end{bmatrix}^T$ are two vectors, then their dot product $\vec{a} \cdot \vec{b}$ is defined as:

$$\vec{a} \cdot \vec{b} = a_1b_1 + a_2b_2 + ... + a_nb_n$$

- The dot product also has a geometric interpretation. If $|\vec{a}|$ and $|\vec{b}|$ are the $L_2$ norms (lengths) of $\vec{a}$ and $\vec{b}$, and $\theta$ is the angle between $\vec{a}$ and $\vec{b}$, then:

$$\vec{a} \cdot \vec{b} = |\vec{a}| |\vec{b}| \cos \theta$$

(source)

(source)- $\cos \theta$ is equal to its maximum value (1) when $\theta = 0$, i.e. when $\vec{a}$ and $\vec{b}$ point in the same direction.

Cosine similarity and bag of words¶

To measure the similarity between two word vectors, instead of just counting the number of shared words, we should compute their normalized dot product, also known as their cosine similarity.

$$\cos \theta = \boxed{\frac{\vec{a} \cdot \vec{b}}{|\vec{a}| | \vec{b}|}}$$

- If all elements in $\vec{a}$ and $\vec{b}$ are non-negative, then $\cos \theta$ ranges from 0 to 1.

- 🚨 Key idea: The larger $\cos \theta$ is, the more similar the two vectors are!

- It is important to normalize by the lengths of the vectors, otherwise texts with more words will have artificially high similarities with other texts.

Normalizing¶

$$\cos \theta = \boxed{\frac{\vec{a} \cdot \vec{b}}{|\vec{a}| | \vec{b}|}}$$

- Why can't we just use the dot product – that is, why must we divide by $|\vec{a}| | \vec{b}|$?

- Consider the following example:

| big | data | science | |

|---|---|---|---|

| big big big big data | 4 | 1 | 0 |

| big data science | 1 | 1 | 1 |

| science big data | 1 | 1 | 1 |

| Pair | Dot Product | Cosine Similarity |

|---|---|---|

| big data science and big big big big data | 5 | 0.7001 |

| big data science and science big data | 3 | 1 |

'big big big big data'has a large dot product with'big data science'just because it has the word'big'four times. But intuitively,'big data science'and'science big data'should be as similar as possible, since they're permutations of the same phrase.

- So, make sure to compute the cosine similarity – don't just use the dot product!

Note: Sometimes, you will see the cosine distance being used. It is the complement of cosine similarity:

$$\text{dist}(\vec{a}, \vec{b}) = 1 - \cos \theta$$

If $\text{dist}(\vec{a}, \vec{b})$ is small, the two word vectors are similar.

A recipe for computing similarities¶

Given a set of documents, to find the most similar text to one document $d$ in particular:

- Use the bag of words model to create a counts matrix, in which:

- there is 1 row per document,

- there is 1 column per unique word that is used across documents, and

- the value in row

docand columnwordis the number of occurrences ofwordindoc.

- Compute the cosine similarity between $d$'s row vector and all other documents' row vectors.

- The other document with the greatest cosine similarity is the most similar, under the bag of words model.

Example: Global warming 🌎¶

Consider the following three documents.

sentences = pd.Series([

'I really really want global peace',

'I must enjoy global warming',

'We must solve climate change'

])

sentences

0 I really really want global peace 1 I must enjoy global warming 2 We must solve climate change dtype: object

Let's represent each document using the bag of words model.

unique_words = sentences.str.split().explode().value_counts()

unique_words

I 2

really 2

global 2

..

solve 1

climate 1

change 1

Name: count, Length: 12, dtype: int64

counts_dict = {}

for word in unique_words.index:

re_pat = fr'\b{word}\b'

counts_dict[word] = sentences.str.count(re_pat)

counts_df = pd.DataFrame(counts_dict).set_index(sentences)

counts_df

| I | really | global | must | ... | We | solve | climate | change | |

|---|---|---|---|---|---|---|---|---|---|

| I really really want global peace | 1 | 2 | 1 | 0 | ... | 0 | 0 | 0 | 0 |

| I must enjoy global warming | 1 | 0 | 1 | 1 | ... | 0 | 0 | 0 | 0 |

| We must solve climate change | 0 | 0 | 0 | 1 | ... | 1 | 1 | 1 | 1 |

3 rows × 12 columns

Let's now find the cosine similarity between each pair of documents.

counts_df

| I | really | global | must | ... | We | solve | climate | change | |

|---|---|---|---|---|---|---|---|---|---|

| I really really want global peace | 1 | 2 | 1 | 0 | ... | 0 | 0 | 0 | 0 |

| I must enjoy global warming | 1 | 0 | 1 | 1 | ... | 0 | 0 | 0 | 0 |

| We must solve climate change | 0 | 0 | 0 | 1 | ... | 1 | 1 | 1 | 1 |

3 rows × 12 columns

def sim_pair(s1, s2):

return np.dot(s1, s2) / (np.linalg.norm(s1) * np.linalg.norm(s2))

# Look at the documentation of the .corr method to see how this works!

counts_df.T.corr(sim_pair)

| I really really want global peace | I must enjoy global warming | We must solve climate change | |

|---|---|---|---|

| I really really want global peace | 1.00 | 0.32 | 0.0 |

| I must enjoy global warming | 0.32 | 1.00 | 0.2 |

| We must solve climate change | 0.00 | 0.20 | 1.0 |

Issue: Bag of words only encodes the words that each document uses, not their meanings.

- "I really really want global peace" and "We must solve climate change" have similar meanings, but have no shared words, and thus a low cosine similarity.

- "I really really want global peace" and "I must enjoy global warming" have very different meanings, but a relatively high cosine similarity.

Pitfalls of the bag of words model¶

Remember, the key assumption underlying the bag of words model is that two documents are similar if they share many words in common.

- The bag of words model doesn't consider order.

- The job titles

'deputy fire chief'and'chief fire deputy'are treated as the same.

- The job titles

- The bag of words model doesn't consider the meaning of words.

'I love data science'and'I hate data science'share 75% of their words, but have very different meanings.

- The bag of words model treats all words as being equally important.

'deputy'and'fire'have the same importance, even though'fire'is probably more important in describing someone's job title.- Let's address this point.

TF-IDF¶

The importance of words¶

Issue: The bag of words model doesn't know which words are "important" in a document. Consider the following document:

How do we determine which words are important?

- Repetition of words indicates importance, but

- the most common words ("the", "of", "at") often don't have much meaning!

Goal: Find a way of quantifying the importance of a word in a document by balancing the above two factors, i.e. find the word that best summarizes a document.

Term frequency¶

- The term frequency of a word (term) $t$ in a document $d$, denoted $\text{tf}(t, d)$ is the proportion of words in document $d$ that are equal to $t$.

$$ \text{tf}(t, d)= \frac{\text{\# of occurrences of $t$ in $d$}}{\text{total \# of words in $d$}} $$

- Example: What is the term frequency of "billy" in the following document?

- Answer: $\frac{2}{10} = \frac15$.

- Intuition: Words that occur often within a document are important to the document's meaning.

- If $\text{tf}(t, d)$ is large, then word $t$ occurs often in $d$.

- If $\text{tf}(t, d)$ is small, then word $t$ does not occur often $d$.

- Issue: "the" also has a TF of $\frac15$, but it seems less important than "basket".

Inverse document frequency¶

- The inverse document frequency of a word $t$ in a set of documents $d_1, d_2, ...$ is

$$\text{idf}(t) = \log \left(\frac{\text{total \# of documents}}{\text{\# of documents in which $t$ appears}} \right)$$

- Example: What is the inverse document frequency of "basket" in the following three documents?

- "at the last game, the team scored basket after basket"

- "our team is on fire"

- "they only missed one basket"

- Answer: $\log \left(\frac{3}{2}\right) \approx 0.4055$.

- Intuition: If a word appears in every document (like "the", "of", "at"), it is probably not a good summary of any one document.

- If $\text{idf}(t)$ is large, then $t$ is rarely found in documents.

- If $\text{idf}(t)$ is small, then $t$ is commonly found in documents.

- Think of $\text{idf}(t)$ as the "rarity factor" of $t$ across documents – the larger $\text{idf}(t)$ is, the more rare $t$ is.

Intuition¶

$$\text{tf}(t, d) = \frac{\text{\# of occurrences of $t$ in $d$}}{\text{total \# of words in $d$}}$$

$$\text{idf}(t) = \log \left(\frac{\text{total \# of documents}}{\text{\# of documents in which $t$ appears}} \right)$$

Goal: Quantify how well word $t$ summarizes document $d$.

- If $\text{tf}(t, d)$ is small, then $t$ doesn't occur very often in $d$, so $t$ can't be a good summary of $d$.

- If $\text{idf}(t)$ is small, then $t$ occurs often amongst all documents, and so it is not a good summary of any one document.

- If $\text{tf}(t, d)$ and $\text{idf}(t)$ are both large, then $t$ occurs often in $d$ but rarely overall. This makes $t$ a good summary of document $d$.

Term frequency-inverse document frequency¶

The term frequency-inverse document frequency (TF-IDF) of word $t$ in document $d$ is the product:

$$ \begin{align*} \text{tfidf}(t, d) &= \text{tf}(t, d) \cdot \text{idf}(t) \\\ &= \frac{\text{\# of occurrences of $t$ in $d$}}{\text{total \# of words in $d$}} \cdot \log \left(\frac{\text{total \# of documents}}{\text{\# of documents in which $t$ appears}} \right) \end{align*} $$

- If $\text{tfidf}(t, d)$ is large, then $t$ is a good summary of $d$, because $t$ occurs often in $d$ but rarely across all documents.

- TF-IDF is a heuristic – it has no probabilistic justification.

- To know if $\text{tfidf}(t, d)$ is large for one particular word $t$, we need to compare it to $\text{tfidf}(t_i, d)$, for several different words $t_i$.

Computing TF-IDF¶

Question: What is the TF-IDF of "global" in the second sentence?

sentences

0 I really really want global peace 1 I must enjoy global warming 2 We must solve climate change dtype: object

Answer:

tf = sentences.iloc[1].count('global') / len(sentences.iloc[1].split())

tf

0.2

idf = np.log(len(sentences) / sentences.str.contains('global').sum())

idf

0.4054651081081644

tf * idf

0.08109302162163289

Question: Is this big or small? Is "global" the best summary of the second sentence?

TF-IDF of all words in all documents¶

On its own, the TF-IDF of a word in a document doesn't really tell us anything; we must compare it to TF-IDFs of other words in that same document.

sentences

0 I really really want global peace 1 I must enjoy global warming 2 We must solve climate change dtype: object

unique_words = np.unique(sentences.str.split().explode())

unique_words

array(['I', 'We', 'change', 'climate', 'enjoy', 'global', 'must', 'peace',

'really', 'solve', 'want', 'warming'], dtype=object)

tfidf_dict = {}

for word in unique_words:

re_pat = fr'\b{word}\b'

tf = sentences.str.count(re_pat) / sentences.str.split().str.len()

idf = np.log(len(sentences) / sentences.str.contains(re_pat).sum())

tfidf_dict[word] = tf * idf

tfidf = pd.DataFrame(tfidf_dict).set_index(sentences)

tfidf

| I | We | change | climate | ... | really | solve | want | warming | |

|---|---|---|---|---|---|---|---|---|---|

| I really really want global peace | 0.07 | 0.00 | 0.00 | 0.00 | ... | 0.37 | 0.00 | 0.18 | 0.00 |

| I must enjoy global warming | 0.08 | 0.00 | 0.00 | 0.00 | ... | 0.00 | 0.00 | 0.00 | 0.22 |

| We must solve climate change | 0.00 | 0.22 | 0.22 | 0.22 | ... | 0.00 | 0.22 | 0.00 | 0.00 |

3 rows × 12 columns

Interpreting TF-IDFs¶

display_df(tfidf, cols=12)

| I | We | change | climate | enjoy | global | must | peace | really | solve | want | warming | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| I really really want global peace | 0.07 | 0.00 | 0.00 | 0.00 | 0.00 | 0.07 | 0.00 | 0.18 | 0.37 | 0.00 | 0.18 | 0.00 |

| I must enjoy global warming | 0.08 | 0.00 | 0.00 | 0.00 | 0.22 | 0.08 | 0.08 | 0.00 | 0.00 | 0.00 | 0.00 | 0.22 |

| We must solve climate change | 0.00 | 0.22 | 0.22 | 0.22 | 0.00 | 0.00 | 0.08 | 0.00 | 0.00 | 0.22 | 0.00 | 0.00 |

The above DataFrame tells us that:

- the TF-IDF of

'really'in the first sentence is $\approx$ 0.37, - the TF-IDF of

'climate'in the second sentence is 0.

Note that there are two ways that $\text{tfidf}(t, d) = \text{tf}(t, d) \cdot \text{idf}(t)$ can be 0:

- If $t$ appears in every document, because then $\text{idf}(t) = \log (\frac{\text{\# documents}}{\text{\# documents}}) = \log(1) = 0$.

- If $t$ does not appear in document $d$, because then $\text{tf}(t, d) = \frac{0}{\text{len}(d)} = 0$.

The word that best summarizes a document is the word with the highest TF-IDF for that document:

display_df(tfidf, cols=12)

| I | We | change | climate | enjoy | global | must | peace | really | solve | want | warming | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| I really really want global peace | 0.07 | 0.00 | 0.00 | 0.00 | 0.00 | 0.07 | 0.00 | 0.18 | 0.37 | 0.00 | 0.18 | 0.00 |

| I must enjoy global warming | 0.08 | 0.00 | 0.00 | 0.00 | 0.22 | 0.08 | 0.08 | 0.00 | 0.00 | 0.00 | 0.00 | 0.22 |

| We must solve climate change | 0.00 | 0.22 | 0.22 | 0.22 | 0.00 | 0.00 | 0.08 | 0.00 | 0.00 | 0.22 | 0.00 | 0.00 |

tfidf.idxmax(axis=1)

I really really want global peace really I must enjoy global warming enjoy We must solve climate change We dtype: object

Look closely at the rows of tfidf – in documents 2 and 3, the max TF-IDF is not unique!

Example: State of the Union addresses 🎤¶

State of the Union addresses¶

The 2026 State of the Union address is scheduled for February 24, 2026. This is an annual speech delivered by the President to Congress, with updates on the current state of the nation.

The data¶

from pathlib import Path

sotu_txt = Path('data') / 'stateoftheunion1790-2025.txt'

sotu = sotu_txt.read_text()

len(sotu)

10682907

The entire corpus (another word for "set of documents") is over 10 million characters long... let's not display it in our notebook.

print(sotu[:1500])

The Project Gutenberg EBook of Complete State of the Union Addresses, from 1790 to the Present. Speeches beginning in 2002 are from UCSB The American Presidency Project. Speeches from 2018-2024 were manually downloaded from whitehouse.gov or https://www.presidency.ucsb.edu/documents/app-categories/spoken-addresses-and-remarks/presidential/state-the-union-addresses. Character set encoding: UTF8 The addresses are separated by three asterisks CONTENTS George Washington, State of the Union Address, January 8, 1790 George Washington, State of the Union Address, December 8, 1790 George Washington, State of the Union Address, October 25, 1791 George Washington, State of the Union Address, November 6, 1792 George Washington, State of the Union Address, December 3, 1793 George Washington, State of the Union Address, November 19, 1794 George Washington, State of the Union Address, December 8, 1795 George Washington, State of the Union Address, December 7, 1796 John Adams, State of the Union Address, November 22, 1797 John Adams, State of the Union Address, December 8, 1798 John Adams, State of the Union Address, December 3, 1799 John Adams, State of the Union Address, November 11, 1800 Thomas Jefferson, State of the Union Address, December 8, 1801 Thomas Jefferson, State of the Union Address, December 15, 1802 Thomas Jefferson, State of the Union Address, October 17, 1803 Thomas Jefferson, State of the Union Address, November 8, 1804 Thomas Jeffer

Each speech is separated by '***'.

speeches = sotu.split('\n***\n')[1:]

len(speeches)

235

Note that each "speech" currently contains other information, like the name of the president and the date of the address.

print(speeches[-1][:1000])

State of the Union Address Donald J. Trump March 4, 2025 Speaker Johnson, Vice President Vance, the first lady of the United States, members of the United States Congress, thank you very much and to my fellow citizens: America is back. Six weeks ago, I stood beneath the dome of this Capitol and proclaimed the dawn of the Golden Age of America. From that moment on, it has been nothing but swift and unrelenting action to usher in the greatest and most successful era in the history of our country. We have accomplished more in 43 days than most administrations accomplished in 4 years, 8 years — and we are just getting started. I return to this chamber tonight to report that America's momentum is back. Our spirit is back. Our pride is back. Our confidence is back. And the American Dream is surging bigger and better than ever before.The American Dream is unstoppable, and our country is on the verge of a comeback the likes of which the world has never witnessed, and perhaps will never wit

Let's extract just the speech text.

import re

def extract_struct(speech):

L = speech.strip().split('\n', maxsplit=3)

L[3] = re.sub(r"[^A-Za-z' ]", ' ', L[3]).lower()

return dict(zip(['speech', 'president', 'date', 'contents'], L))

speeches_df = pd.DataFrame(list(map(extract_struct, speeches)))

speeches_df

| speech | president | date | contents | |

|---|---|---|---|---|

| 0 | State of the Union Address | George Washington | January 8, 1790 | fellow citizens of the senate and house of re... |

| 1 | State of the Union Address | George Washington | December 8, 1790 | fellow citizens of the senate and house of re... |

| 2 | State of the Union Address | George Washington | October 25, 1791 | fellow citizens of the senate and house of re... |

| ... | ... | ... | ... | ... |

| 232 | State of the Union Address | Joseph R. Biden Jr. | February 7, 2023 | mr speaker madam vice president our firs... |

| 233 | State of the Union Address | Joseph R. Biden Jr. | March 7, 2024 | before speaking the president presented his... |

| 234 | State of the Union Address | Donald J. Trump | March 4, 2025 | speaker johnson vice president vance the f... |

235 rows × 4 columns

Finding the most important words in each speech¶

Here, a "document" is a speech. We have 235 documents.

speeches_df

| speech | president | date | contents | |

|---|---|---|---|---|

| 0 | State of the Union Address | George Washington | January 8, 1790 | fellow citizens of the senate and house of re... |

| 1 | State of the Union Address | George Washington | December 8, 1790 | fellow citizens of the senate and house of re... |

| 2 | State of the Union Address | George Washington | October 25, 1791 | fellow citizens of the senate and house of re... |

| ... | ... | ... | ... | ... |

| 232 | State of the Union Address | Joseph R. Biden Jr. | February 7, 2023 | mr speaker madam vice president our firs... |

| 233 | State of the Union Address | Joseph R. Biden Jr. | March 7, 2024 | before speaking the president presented his... |

| 234 | State of the Union Address | Donald J. Trump | March 4, 2025 | speaker johnson vice president vance the f... |

235 rows × 4 columns

A rough sketch of what we'll compute:

for each word t:

for each speech d:

compute tfidf(t, d)

unique_words = speeches_df['contents'].str.split().explode().value_counts()

# Take the top 1000 most common words for speed

unique_words = unique_words.iloc[:1000].index

unique_words

Index(['the', 'of', 'and', 'to', 'in', 'a', 'that', 'for', 'be', 'our',

...

'medical', 'goods', 'application', 'farmers', 'interstate', 'expressed',

'amendment', 'earth', 'opportunities', 'understanding'],

dtype='object', name='contents', length=1000)

💡 Pro-Tip: Using tqdm¶

This code takes a while to run, so we'll use the tdqm package to track its progress. (Install with mamba install tqdm if needed).

from tqdm.notebook import tqdm

tfidf_dict = {}

tf_denom = speeches_df['contents'].str.split().str.len()

# Wrap the sequence with `tqdm()` to display a progress bar

for word in tqdm(unique_words):

re_pat = fr' {word} ' # Imperfect pattern for speed.

tf = speeches_df['contents'].str.count(re_pat) / tf_denom

idf = np.log(len(speeches_df) / speeches_df['contents'].str.contains(re_pat).sum())

tfidf_dict[word] = tf * idf

0%| | 0/1000 [00:00<?, ?it/s]

tfidf = pd.DataFrame(tfidf_dict)

tfidf.sample(10)

| the | of | and | to | ... | amendment | earth | opportunities | understanding | |

|---|---|---|---|---|---|---|---|---|---|

| 178 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.00e+00 | 1.55e-04 | 8.06e-04 | 0.00e+00 |

| 32 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.00e+00 | 0.00e+00 | 0.00e+00 | 0.00e+00 |

| 71 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 5.12e-04 | 4.93e-05 | 0.00e+00 | 5.90e-05 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 184 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.00e+00 | 2.79e-04 | 1.81e-04 | 0.00e+00 |

| 179 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.00e+00 | 0.00e+00 | 1.98e-04 | 0.00e+00 |

| 158 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.00e+00 | 1.29e-04 | 0.00e+00 | 0.00e+00 |

10 rows × 1000 columns

Note that the TF-IDFs of many common words are all 0!

Summarizing speeches¶

By using idxmax, we can find the word with the highest TF-IDF in each speech.

summaries = tfidf.idxmax(axis=1)

summaries

0 object

1 objects

2 objects

...

232 let's

233 i'm

234 it's

Length: 235, dtype: object

What if we want to see the 10 words with the highest TF-IDFs, for each speech?

def ten_largest(row):

return ', '.join(row.index[row.argsort()][-10:])

keywords = tfidf.apply(ten_largest, axis=1)

keywords_df = pd.concat([

speeches_df['president'],

speeches_df['date'],

keywords

], axis=1)

keywords_df

| president | date | 0 | |

|---|---|---|---|

| 0 | George Washington | January 8, 1790 | directed, various, proper, particularly, satis... |

| 1 | George Washington | December 8, 1790 | seems, commerce, western, revenues, convention... |

| 2 | George Washington | October 25, 1791 | lands, circumstances, satisfaction, completed,... |

| ... | ... | ... | ... |

| 232 | Joseph R. Biden Jr. | February 7, 2023 | workers, percent, we've, job, jobs, that's, to... |

| 233 | Joseph R. Biden Jr. | March 7, 2024 | we've, percent, thank, tonight, get, we're, do... |

| 234 | Donald J. Trump | March 4, 2025 | million, job, that's, tonight, i'm, don't, goi... |

235 rows × 3 columns

Uncomment the cell below to see every single row of keywords_df.

#display_df(keywords_df, rows=235)

Aside: What if we remove the $\log$ from $\text{idf}(t)$?¶

Let's try it and see what happens.

tfidf_nl_dict = {}

tf_denom = speeches_df['contents'].str.split().str.len()

for word in tqdm(unique_words):

re_pat = fr' {word} ' # Imperfect pattern for speed.

tf = speeches_df['contents'].str.count(re_pat) / tf_denom

idf_nl = len(speeches_df) / speeches_df['contents'].str.contains(re_pat).sum()

tfidf_nl_dict[word] = tf * idf_nl

0%| | 0/1000 [00:00<?, ?it/s]

tfidf_nl = pd.DataFrame(tfidf_nl_dict)

tfidf_nl.head()

| the | of | and | to | ... | amendment | earth | opportunities | understanding | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 0.09 | 0.06 | 0.04 | 0.05 | ... | 0.0 | 0.0 | 0.0 | 0.0 |

| 1 | 0.09 | 0.06 | 0.03 | 0.03 | ... | 0.0 | 0.0 | 0.0 | 0.0 |

| 2 | 0.11 | 0.07 | 0.03 | 0.04 | ... | 0.0 | 0.0 | 0.0 | 0.0 |

| 3 | 0.09 | 0.07 | 0.03 | 0.04 | ... | 0.0 | 0.0 | 0.0 | 0.0 |

| 4 | 0.09 | 0.07 | 0.02 | 0.04 | ... | 0.0 | 0.0 | 0.0 | 0.0 |

5 rows × 1000 columns

keywords_nl = tfidf_nl.apply(ten_largest, axis=1)

keywords_nl_df = pd.concat([

speeches_df['president'],

speeches_df['date'],

keywords_nl

], axis=1)

keywords_nl_df

| president | date | 0 | |

|---|---|---|---|

| 0 | George Washington | January 8, 1790 | will, that, which, in, be, a, and, to, of, the |

| 1 | George Washington | December 8, 1790 | be, as, a, it, which, in, and, to, of, the |

| 2 | George Washington | October 25, 1791 | as, that, be, which, in, a, and, to, of, the |

| ... | ... | ... | ... |

| 232 | Joseph R. Biden Jr. | February 7, 2023 | it's, in, we, a, of, we're, let's, and, to, the |

| 233 | Joseph R. Biden Jr. | March 7, 2024 | i, in, it's, a, that's, of, i'm, to, and, the |

| 234 | Donald J. Trump | March 4, 2025 | our, in, thank, a, it's, of, we're, to, the, and |

235 rows × 3 columns

The role of $\log$ in $\text{idf}(t)$¶

$$ \begin{align*} \text{tfidf}(t, d) &= \text{tf}(t, d) \cdot \text{idf}(t) \\\ &= \frac{\text{\# of occurrences of $t$ in $d$}}{\text{total \# of words in $d$}} \cdot \log \left(\frac{\text{total \# of documents}}{\text{\# of documents in which $t$ appears}} \right) \end{align*} $$

- Remember, for any positive input $x$, $\log(x)$ is (much) smaller than $x$.

- In $\text{idf}(t)$, the $\log$ "dampens" the impact of the ratio $\frac{\text{\# documents}}{\text{\# documents with $t$}}$.

- If a word is very common, the ratio will be close to 1. The log of the ratio will be close to 0.

(1000 / 999)

1.001001001001001

np.log(1000 / 999)

0.001000500333583622

- If a word is very common (e.g. 'the'), removing the log multiplies the statistic by a large factor.

- If a word is very rare, the ratio will be very large. However, for instance, a word being seen in 2 out of 50 documents is not very different than being seen in 2 out of 500 documents (it is very rare in both cases), and so $\text{idf}(t)$ should be similar in both cases.

(50 / 2)

25.0

(500 / 2)

250.0

np.log(50 / 2)

3.2188758248682006

np.log(500 / 2)

5.521460917862246

Question 🤔

From the Fall 23 final: Consider the following corpus:

Document number Content

1 yesterday rainy today sunny

2 yesterday sunny today sunny

3 today rainy yesterday today

4 yesterday yesterday today today

- Using a bag-of-words representation, which two documents have the largest dot product?

- Using a bag-of-words representation, what is the cosine similarity between documents 2 and 3?

- Which words have a TF-IDF score of 0 for all four documents?

Summary, next time¶

Summary¶

- One way to turn documents, like

'deputy fire chief', into feature vectors, is to count the number of occurrences of each word in the document, ignoring order. This is done using the bag of words model. - To measure the similarity of two documents under the bag of words model, compute the cosine similarity of their two word vectors.

- Term frequency-inverse document frequency (TF-IDF) is a statistic that tries to quantify how important a word (term) is to a document. It balances:

- how often a word appears in a particular document, $\text{tf}(t, d)$, with

- how often a word appears across documents, $\text{idf}(t)$.

- For a given document, the word with the highest TF-IDF is thought to "best summarize" that document.

Next time¶

Modeling and feature engineering.